Memory management is a fundamental aspect of programming, essential to the stability and performance of applications. Among the challenges associated with managing memory is the phenomenon of memory leaks, which can significantly degrade the performance of an application or even cause it to crash. This article delves into what memory leaks are, their causes, how they can be detected, and methods to prevent them. Additionally, it includes practical coding examples and discusses how the use of SMART TS XL can enhance the detection, analysis, and prevention of memory leaks through advanced static analysis, flowchart building, and code quality improvements.



What Are Memory Leaks?

A memory leak occurs when a program allocates memory from the heap but fails to release it when it is no longer needed. The program no longer uses that memory, yet neither the program's allocator nor the operating system can reuse it until the process exits. Over time, these unreleased blocks accumulate and reduce the amount of available memory, which degrades performance and can eventually crash the program if the system runs out of memory.

In managed languages like Java or C#, memory management is handled by the garbage collector, which automatically reclaims memory that is no longer referenced. However, even in these environments, memory leaks can occur if objects are still referenced inadvertently, preventing the garbage collector from freeing the memory.

Causes of Memory Leaks

Memory leaks are among the most pervasive and insidious issues in software development, silently degrading performance and destabilizing applications over time. At their core, memory leaks occur when a program allocates memory but fails to release it after the data is no longer needed. Unlike crashes or obvious bugs, leaks often go unnoticed during initial testing, only manifesting after prolonged use—when the application slows to a crawl or abruptly terminates due to exhausted system resources.

The impact of memory leaks can range from minor inefficiencies to catastrophic failures, particularly in long-running systems like servers, embedded devices, or mobile apps. In extreme cases, leaks can cause system-wide slowdowns, forcing users to reboot their devices or services to reclaim memory. Even in garbage-collected languages like Java or Python, where automatic memory management is expected to handle cleanup, subtle programming mistakes can still lead to leaks through lingering references or unclosed resources.

Understanding the root causes of memory leaks is essential for developers across all levels of expertise. Whether working with low-level languages like C++ that require manual memory management or high-level languages with garbage collection, programmers must adopt disciplined practices to prevent leaks. This section explores the most common sources of memory leaks, how they occur, and strategies to mitigate them. By recognizing these pitfalls, developers can write more efficient, reliable, and maintainable code that performs well throughout its lifecycle.

Manual Memory Management Errors

In languages like C and C++, memory management is entirely manual. Every block allocated with malloc, calloc, or realloc must be released with free, every object created with new must be destroyed with delete, and every array created with new[] must be destroyed with delete[]. A memory leak occurs when developers forget to release this memory after it is no longer needed. These omissions often arise from complex control flows, early returns, or exceptions that bypass deallocation calls. Improper reallocation also leaks memory: writing p = realloc(p, n), for example, loses the only pointer to the original block if realloc fails and returns NULL. A related pitfall is the dangling pointer, a reference to memory that has already been freed; it does not leak memory, but using it is undefined behavior and often produces hard-to-diagnose crashes. Developers must follow strict discipline and code review standards when dealing with manual memory management. Tools like Valgrind, AddressSanitizer (which includes LeakSanitizer on supported platforms), and the Clang Static Analyzer help track allocations and confirm that every allocation has a matching deallocation. In critical systems programming, leaks caused by manual memory errors can degrade performance or make the application behave unpredictably over time.

Unbounded or Growing Data Structures

Collections that grow over time without proper limits are a common source of memory leaks, especially in long-running applications. Data structures such as lists, queues, dictionaries, and caches are often used to store objects for temporary processing or lookup. If old entries are never removed or expired, the structure continues to consume memory even after the data becomes irrelevant. For example, a logging system may append every message to a list that is never cleared, or a caching layer might store query results indefinitely without any expiration strategy. In high-volume applications, these structures can grow to hold thousands or millions of objects, eventually causing out-of-memory conditions. Developers should implement bounds, cleanup intervals, or least-recently-used (LRU) eviction policies to ensure that data structures do not grow unchecked. In garbage-collected languages, this type of leak is particularly tricky because the memory is technically reachable, so it won’t be collected. Monitoring collection size and establishing controls to prune old or unused entries helps prevent slow memory creep that could otherwise go unnoticed during development or low-scale testing.
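One way to apply an LRU bound, sketched in Python with `collections.OrderedDict`. The `LRUCache` class and its `max_entries` parameter are illustrative assumptions, not a specific library's API; for memoizing a function, the standard library's `functools.lru_cache(maxsize=...)` provides a similar bound.

```python
from collections import OrderedDict


class LRUCache:
    """A size-bounded cache that evicts the least recently used entry."""

    def __init__(self, max_entries):
        if max_entries <= 0:
            raise ValueError("max_entries must be positive")
        self.max_entries = max_entries
        self._data = OrderedDict()

    def get(self, key, default=None):
        if key not in self._data:
            return default
        self._data.move_to_end(key)  # mark as most recently used
        return self._data[key]

    def put(self, key, value):
        if key in self._data:
            self._data.move_to_end(key)
        self._data[key] = value
        if len(self._data) > self.max_entries:
            self._data.popitem(last=False)  # evict the oldest entry

    def __len__(self):
        return len(self._data)


cache = LRUCache(max_entries=3)
for query_id in range(10):
    cache.put(query_id, f"result-{query_id}")
print(len(cache))  # 3
```

Eviction keeps the cache's memory proportional to `max_entries` rather than to the total number of distinct keys ever seen.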

Circular References in Garbage-Collected Languages

Languages with garbage collection like Java, Python, and JavaScript simplify memory management by automatically cleaning up unreachable objects, but circular references still deserve attention. The tracing collectors used by the JVM and modern JavaScript engines reclaim unreachable cycles, and CPython's cycle detector does the same for cycles that reference counting alone cannot free. The risk lies elsewhere. In purely reference-counted schemes without a cycle detector, such as C++ shared_ptr or Swift's ARC, a cycle is never freed. In CPython, cycles linger until the next cycle collection, and before Python 3.4 a cycle containing objects with __del__ methods was never collected at all. In any runtime, a cycle that is still attached to a long-lived root, such as a global registry, remains reachable and therefore is never collected. Additionally, closures or lambdas may capture parent-scope variables unintentionally, keeping objects alive beyond their intended lifecycle. This problem often shows up in applications with reactive programming, event systems, or object graphs that form tight loops. Breaking these cycles manually by nulling references or using weak references is the recommended approach. Some languages also offer specialized data structures or context managers that minimize the risk of forming strong reference chains. Without attention to this detail, retained cycles can silently accumulate memory, leading to performance degradation and difficult-to-trace leaks.
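In CPython, reference counting frees most objects the moment their last reference disappears, but it cannot free a cycle; cycles wait for the collector in the `gc` module. The sketch below shows both cases: a parent/child cycle built with strong references survives until `gc.collect()`, while a weak back-reference from child to parent lets reference counting free the tree immediately. `Node`, `make_tree`, and `weak_parent` are hypothetical names, and other runtimes (PyPy, the JVM, JavaScript engines) do not free objects at the moment their last reference disappears, so the timing shown here is CPython-specific.

```python
import gc
import weakref


class Node:
    def __init__(self, name):
        self.name = name
        self.children = []
        self._parent = None  # a strong reference or a weakref.ref

    def add_child(self, child, weak_parent):
        self.children.append(child)
        # A weak back-reference lets the child find its parent without
        # keeping the parent alive.
        child._parent = weakref.ref(self) if weak_parent else self

    @property
    def parent(self):
        p = self._parent
        return p() if isinstance(p, weakref.ref) else p


def make_tree(weak_parent):
    root = Node("root")
    root.add_child(Node("leaf"), weak_parent)
    return weakref.ref(root)  # a probe that does not keep root alive


gc.disable()  # pause the cycle collector so only reference counting runs
try:
    strong_probe = make_tree(weak_parent=False)
    weak_probe = make_tree(weak_parent=True)
    print(strong_probe() is not None)  # True: the cycle keeps root alive
    print(weak_probe() is not None)    # False: freed when make_tree returned
finally:
    gc.enable()

gc.collect()  # the cycle collector finds and frees the unreachable cycle
print(strong_probe() is not None)  # False
```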

Unclosed Resources

Applications that interact with system resources such as files, database connections, network sockets, or streams must ensure these resources are explicitly released. Unlike regular objects that can be garbage collected, these resources are often tied to operating system handles and require manual or structured cleanup. If a file is opened but never closed, or a database connection is left hanging, it not only consumes memory but also reserves file descriptors, socket connections, or database pool slots. Over time, this can result in file handle exhaustion or blocked connection pools. Modern programming languages often offer constructs like try-with-resources in Java, using statements in C#, or context managers in Python to guarantee that resources are closed even when exceptions occur. Developers who ignore or bypass these constructs risk introducing silent but damaging resource leaks. In large systems, even a small percentage of unclosed resources can cause system-wide issues, especially when applications scale under concurrent load. Tracking and closing resources reliably must be a fundamental practice in every development workflow.
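A Python sketch of the guarantee these constructs provide. `Connection` is a hypothetical stand-in for a real driver object, and the class-level `open_count` exists only to make the effect visible. Because `__exit__` runs whether or not the block raises, the connection is closed even when the query fails; Java's try-with-resources and C#'s `using` give the same guarantee for `AutoCloseable` and `IDisposable` types.

```python
class Connection:
    """Hypothetical wrapper around a pooled database connection."""

    open_count = 0  # how many connections are currently open

    def __init__(self, dsn):
        self.dsn = dsn
        self.closed = False
        Connection.open_count += 1

    def close(self):
        if not self.closed:  # closing twice is harmless
            self.closed = True
            Connection.open_count -= 1

    # Context-manager protocol: __exit__ runs even if the block raises.
    def __enter__(self):
        return self

    def __exit__(self, exc_type, exc, tb):
        self.close()
        return False  # do not swallow the exception


def risky_query(dsn):
    with Connection(dsn) as conn:
        raise RuntimeError(f"query on {conn.dsn} failed")


try:
    risky_query("db://example")
except RuntimeError:
    pass
print(Connection.open_count)  # 0
```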

Static and Global Variables

Static and global variables are designed to persist for the lifetime of an application, which makes them inherently risky if not managed carefully. When these variables hold large objects, temporary data, or references to UI components or session-specific information, they prevent the garbage collector from reclaiming that memory even after it is no longer useful. A static cache that is never cleared, or a global service that retains old results indefinitely, slowly consumes more memory over time. This issue is especially problematic in systems that handle user sessions, transactions, or batch jobs where different contexts are processed repeatedly. If the static field accumulates state from each instance and never resets, the memory cost scales with usage. Developers should limit the use of static variables to constants or small utilities that are guaranteed to remain relevant throughout the application’s lifecycle. If persistent storage is required, mechanisms for periodically trimming or invalidating stored values should be implemented. Routine memory audits and profiling can also help uncover unexpected memory growth caused by improperly scoped static references.
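A Python sketch of a module-level registry that trims itself instead of growing with usage. `SessionRegistry`, `ttl_seconds`, and the injectable `clock` are illustrative assumptions; the clock parameter exists so tests can advance time without sleeping.

```python
import time


class SessionRegistry:
    """Process-wide session store that cannot grow without bound.

    Entries are removed explicitly when a session ends, and any entry
    idle for longer than ttl_seconds is trimmed on each write.
    """

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl_seconds = ttl_seconds
        self._clock = clock
        self._sessions = {}  # session_id -> (last_seen, data)

    def touch(self, session_id, data):
        self._trim()
        self._sessions[session_id] = (self._clock(), data)

    def end(self, session_id):
        self._sessions.pop(session_id, None)  # explicit cleanup on logout

    def _trim(self):
        cutoff = self._clock() - self.ttl_seconds
        expired = [sid for sid, (seen, _) in self._sessions.items() if seen < cutoff]
        for sid in expired:
            del self._sessions[sid]

    def __len__(self):
        return len(self._sessions)


# Module-level ("static") instance, but with bounded retention.
SESSIONS = SessionRegistry(ttl_seconds=1800)
```

Trimming on every write is O(n) in the number of sessions; a busier service might trim on a timer or keep entries ordered by last access instead.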

Thread-Related Leaks

Multithreaded applications introduce unique challenges for memory management, particularly around thread-local storage and long-lived threads. When data is stored in thread-local variables but never cleared, it remains associated with the thread for as long as the thread exists. This becomes a memory leak if the thread persists longer than necessary or is reused indefinitely in a thread pool. Additionally, background threads that are blocked, sleeping, or waiting on events may hold onto objects long after they are needed. If a thread references an object that was meant to be ephemeral, such as a request object or temporary buffer, that object cannot be collected until the thread releases the reference or terminates. In cases where threads are poorly managed or abandoned, these leaks persist silently and grow as the system scales. Best practices include cleaning up thread-local variables explicitly, ensuring that long-running threads release unnecessary references, and designing worker threads to reset their context between tasks. Thread pools should also be monitored for size and memory consumption to detect when idle threads are retaining more data than expected.
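A Python sketch of resetting thread-local state between tasks on a pooled thread, using `threading.local` and `concurrent.futures.ThreadPoolExecutor`. `handle_request` and `leftover_state` are hypothetical; with `max_workers=1` both submitted tasks run on the same worker thread, so the second task can observe whatever the first one left behind.

```python
import threading
from concurrent.futures import ThreadPoolExecutor

_ctx = threading.local()  # per-thread storage that outlives individual tasks


def handle_request(payload):
    _ctx.request = payload  # attach request-scoped state to the pooled thread
    try:
        return len(_ctx.request)
    finally:
        # Reset the context so the next task on this thread starts clean
        # and the payload can be garbage collected.
        del _ctx.request


def leftover_state():
    return hasattr(_ctx, "request")


with ThreadPoolExecutor(max_workers=1) as pool:
    print(pool.submit(handle_request, b"x" * 1_000_000).result())  # 1000000
    print(pool.submit(leftover_state).result())  # False
```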

Third-Party Library Issues

Not all memory leaks originate from your own code. Libraries and frameworks, especially those that interface with graphics, audio, or external hardware, can contain their own leaks or expose APIs that require explicit cleanup. If these APIs are not used correctly, such as failing to call a dispose() or shutdown() method, the resources they manage will remain allocated. This is particularly common in older libraries, or in newer ones that abstract away complexity but don’t document lifecycle requirements well. In some cases, libraries implement their own caching or resource pooling strategies, which can retain objects in memory longer than anticipated. These caches may be tunable or completely opaque. Additionally, integrating a library might inadvertently keep references to your application’s objects—such as registering a callback that is never removed—which prevents your objects from being collected. Developers must carefully review the documentation of any third-party code they include and monitor memory usage over time to detect leaks introduced by libraries. Testing third-party integrations under load or using profiling tools helps catch these problems early.
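When a library object requires an explicit `dispose()` or `shutdown()` call, wrapping it in a context manager makes the cleanup hard to forget. In this Python sketch, `ThirdPartyRenderer` is a hypothetical stand-in for such a library class, and `contextlib.contextmanager` turns the `try`/`finally` into a reusable `with` block.

```python
from contextlib import contextmanager


class ThirdPartyRenderer:
    """Stand-in for a library object that must be disposed explicitly."""

    live_instances = 0

    def __init__(self):
        ThirdPartyRenderer.live_instances += 1
        self.disposed = False

    def dispose(self):
        if not self.disposed:
            self.disposed = True
            ThirdPartyRenderer.live_instances -= 1


@contextmanager
def renderer():
    r = ThirdPartyRenderer()
    try:
        yield r
    finally:
        r.dispose()  # runs even if the caller's block raises


with renderer() as r:
    pass  # draw something with r
print(ThirdPartyRenderer.live_instances)  # 0
```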

Operating System Handle Leaks

Memory leaks aren’t limited to heap allocations. Applications also rely heavily on operating system handles such as file descriptors, GUI handles, sockets, and semaphores. Each of these resources has a finite system-level limit. When handles are not closed properly, the system eventually runs out of resources, even if memory appears to be available. For example, failing to close a file descriptor on Linux leads to errors like “Too many open files,” which can halt services unexpectedly. In Windows environments, leaked graphical device interface (GDI) handles can prevent new windows or UI elements from rendering. Handle leaks are particularly hard to diagnose because they may not show up in traditional memory profilers. Monitoring tools specific to your platform, such as lsof for Unix or Task Manager in Windows, can reveal abnormal handle usage. Developers must audit their resource handling routines carefully and ensure that every allocation has a corresponding release. Using RAII patterns or scoped resource managers can help enforce correct behavior in both high-level and low-level systems.
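On Linux, a process can count its own open file descriptors by listing `/proc/self/fd`, which makes a simple before/after check possible. This Python sketch assumes Linux and returns `None` elsewhere; `open_fd_count` is a hypothetical helper, and the `open(os.devnull)` call stands in for a real handle leak. On other platforms, `lsof -p <pid>` or the Handles column in Task Manager serves the same purpose.

```python
import os

FD_DIR = "/proc/self/fd"  # Linux-specific; absent on macOS and Windows


def open_fd_count():
    """Return this process's open file-descriptor count, or None if unsupported."""
    if not os.path.isdir(FD_DIR):
        return None
    # os.listdir briefly opens one descriptor of its own, so the absolute
    # number is off by one, but the offset is the same on every call.
    return len(os.listdir(FD_DIR))


before = open_fd_count()
leaked = open(os.devnull)  # simulate a handle that is never closed
after = open_fd_count()
if before is not None:
    print(after - before)  # 1 on Linux in a single-threaded script
leaked.close()
```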

Event Subscriptions and Callbacks

Event-driven systems are prone to memory leaks when components register for events but are never unregistered. This is especially true in applications with long-lived event publishers like UI frameworks, messaging buses, or reactive pipelines. When a listener is registered and not removed, the publisher retains a reference to that listener, keeping the entire object graph alive. For example, if a UI widget listens to updates from a shared model but is never unregistered when removed from the screen, the widget stays in memory. In JavaScript applications, a frequent cause of leaks is a listener registered on a global object such as window or document whose handler still references a DOM node after that node has been removed from the page. The solution lies in symmetrical lifecycle management. Every registration must be paired with an explicit deregistration. Some frameworks support weak event patterns or auto-cleanup hooks to minimize the burden on developers. However, relying on these alone is risky unless you confirm their behavior during teardown. Code reviews and testing should always include verification that event subscriptions are terminated properly.
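A Python sketch of symmetrical lifecycle management: `subscribe` returns an `unsubscribe` handle, and the subscriber stores it and calls it on teardown. `EventBus` and `Widget` are hypothetical names. The stored handle itself refers back to the widget through the bound method it captured, so `dispose` also drops the handle; otherwise the widget would sit in a reference cycle until the cycle collector ran.

```python
import weakref


class EventBus:
    """Minimal publisher that keeps strong references to its listeners."""

    def __init__(self):
        self._listeners = []

    def subscribe(self, listener):
        self._listeners.append(listener)

        def unsubscribe():
            if listener in self._listeners:
                self._listeners.remove(listener)

        return unsubscribe  # caller keeps this and calls it on teardown

    def publish(self, event):
        for listener in list(self._listeners):  # copy: listeners may unsubscribe
            listener(event)

    def listener_count(self):
        return len(self._listeners)


class Widget:
    def __init__(self, bus):
        # Pair the registration with a stored deregistration handle.
        self._unsubscribe = bus.subscribe(self.on_update)
        self.last_event = None

    def on_update(self, event):
        self.last_event = event

    def dispose(self):
        if self._unsubscribe is not None:
            self._unsubscribe()
            self._unsubscribe = None  # the handle also refers back to self


bus = EventBus()
widget = Widget(bus)
probe = weakref.ref(widget)
widget.dispose()  # without this, bus._listeners would keep widget alive
del widget
print(bus.listener_count(), probe() is None)  # 0 True
```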

C++ Smart Pointer Misuse

C++ smart pointers like unique_ptr, shared_ptr, and weak_ptr are powerful tools for automated memory management, but when misused, they can cause subtle memory leaks. A common issue arises when shared_ptr instances form circular references. Since shared pointers use reference counting to manage lifetimes, objects that point to each other with shared ownership will never reach a count of zero, preventing deallocation. This problem is often found in parent-child structures or bidirectional relationships. Developers must use weak_ptr in one direction to break the cycle and allow proper cleanup. Another issue is mixing raw pointers with smart pointers. If raw pointers are used to hold references that are not managed carefully, the benefits of smart pointers are diminished. Some developers mistakenly allocate objects using new and forget to wrap them in a smart pointer, losing track of ownership. Following RAII (Resource Acquisition Is Initialization) principles is essential to ensure resources are released predictably. By designing with smart pointer ownership in mind and avoiding hybrid models of memory management, developers can greatly reduce the chances of introducing leaks in modern C++ code.

Detection of Memory Leaks

Memory leaks are often elusive because they build up slowly and do not always cause immediate errors. Unlike crashes or syntax bugs, leaks may only appear after hours or days of application uptime, especially in systems with persistent workloads or high concurrency. Detecting them requires a combination of observation, instrumentation, and tooling. Below are practical and effective strategies for identifying memory leaks in real-world applications.

Monitor Memory Usage Over Time

One of the first signs of a memory leak is a consistent upward trend in memory usage during normal operation. This can be observed using simple system tools like Task Manager on Windows, top or htop on Linux, or container orchestration dashboards in Kubernetes environments. Memory usage should fluctuate with workloads but eventually stabilize. If it continues to climb over time—especially during idle periods or after repetitive tasks—it is a strong indicator that memory is not being released. In production systems, memory usage graphs can be tracked using system metrics or infrastructure monitoring tools. Correlating usage spikes with specific application events or user interactions can help narrow down the leak’s origin. Early detection through periodic monitoring helps prevent crashes and performance degradation.
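A rough trend check for a series of memory readings, sketched in Python. It fits a least-squares slope to the samples and flags the series when the slope exceeds a chosen limit. The readings and the `max_slope` threshold are made-up example values, and `growth_per_sample` and `looks_like_leak` are hypothetical helpers; in practice the samples would come from a metrics system, and it helps to skip the warm-up period, when caches are still filling, before fitting the trend.

```python
def growth_per_sample(samples):
    """Least-squares slope of a series of memory readings (units per sample)."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_x = (n - 1) / 2
    mean_y = sum(samples) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(samples))
    var = sum((x - mean_x) ** 2 for x in range(n))
    return cov / var


def looks_like_leak(samples, max_slope):
    """Flag a series whose fitted trend rises faster than max_slope."""
    return growth_per_sample(samples) > max_slope


# Hypothetical resident-memory readings in MB, one per minute.
steady = [512, 530, 505, 528, 510, 525, 515]
creeping = [512, 518, 523, 531, 536, 544, 549]
print(looks_like_leak(steady, max_slope=1.0))    # False
print(looks_like_leak(creeping, max_slope=1.0))  # True
```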

Use Heap and Memory Profilers

Heap profilers are essential tools for visualizing memory usage and identifying what objects are consuming space in the application. These tools allow developers to take snapshots of memory at different points in time, then compare them to detect which objects are increasing without being released. In Java, VisualVM and Eclipse Memory Analyzer are commonly used. .NET developers often use dotMemory or CLR Profiler, while C/C++ applications benefit from Valgrind (Memcheck for leak reports, Massif for heap profiling) or AddressSanitizer's leak detection. Python offers tools like objgraph and memory_profiler, as well as the standard library's tracemalloc module. Heap profilers display reference chains, retained memory sizes, and allocation trees, helping trace how memory is being held. For complex applications, combining snapshots with filtering and grouping logic can highlight problematic areas. When used in conjunction with live debugging, profilers allow real-time investigation of objects that stay in memory longer than expected. This insight is critical in diagnosing slow leaks that elude traditional logs or system metrics.

Log Object and Collection Growth

Logging the size of key data structures or object pools over time is a lightweight yet powerful technique to detect leaks during development and testing. Developers can instrument the code to periodically report the length of collections such as lists, maps, queues, or session registries. In scenarios where these data structures are expected to grow temporarily and then shrink, monitoring their size can reveal whether they ever return to baseline. For instance, if a message queue processes tasks but its internal list size never decreases, the objects may be accumulating due to logic gaps. This is particularly useful when profiling is not feasible or when leaks are suspected in specific functional areas. By embedding these logs alongside task execution or user flows, developers gain visibility into abnormal object retention patterns. Automated threshold checks can be added to detect and alert on unchecked growth, allowing early mitigation of memory leaks before they affect performance.
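A Python sketch of size logging with a threshold check. `CollectionWatch`, `register`, and `max_expected_size` are hypothetical names, and `check()` is meant to be called periodically, for example from a scheduler or after each batch. The watcher holds references to the collections it monitors, which is acceptable only because those collections are already long-lived.

```python
import logging

logger = logging.getLogger("memory.watch")


class CollectionWatch:
    """Log the size of named collections and flag runaway growth."""

    def __init__(self):
        self._watched = {}  # name -> (collection, max_expected_size)

    def register(self, name, collection, max_expected_size):
        self._watched[name] = (collection, max_expected_size)

    def check(self):
        """Log every size and return the names that exceed their threshold."""
        over = []
        for name, (collection, limit) in self._watched.items():
            size = len(collection)
            logger.info("collection=%s size=%d", name, size)
            if size > limit:
                logger.warning("collection=%s size=%d exceeds %d", name, size, limit)
                over.append(name)
        return over


pending_jobs = []
watch = CollectionWatch()
watch.register("pending_jobs", pending_jobs, max_expected_size=100)
pending_jobs.extend(range(250))  # jobs that were queued but never drained
print(watch.check())  # ['pending_jobs']
```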

Analyze Garbage Collection Behavior

Garbage-collected runtimes such as Java, Python, and C# expose useful indicators of memory pressure through garbage collection logs and statistics. When the system experiences frequent GC cycles that recover little memory, it typically signals that objects are being retained unnecessarily. Analyzing these logs reveals how often major collections occur, how much memory is reclaimed, and how heap usage changes over time. In Java, tools like GCViewer analyze the JVM's GC logs, which are enabled with -XX:+PrintGCDetails on JDK 8 or with unified logging (-Xlog:gc*) on JDK 9 and later. Excessive GC activity can degrade application performance even before memory is fully exhausted. If the garbage collector runs frequently but cannot reclaim space, developers should investigate object references and allocation paths. Patterns such as old-generation usage that keeps rising after each full collection, and GC pauses that keep lengthening, often point to objects that remain reachable even though the application no longer needs them. Reviewing these patterns regularly is an effective way to detect silent memory retention in managed environments.
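CPython exposes a comparable in-process signal through `gc.callbacks`: each registered callback is called with a phase (`"start"` or `"stop"`) and an info dictionary that includes the generation collected and the numbers of objects collected and found uncollectable. The sketch below records those numbers; `gc_stats`, `record_gc`, and `Peer` are hypothetical names.

```python
import gc

gc_stats = []  # one (generation, collected, uncollectable) tuple per collection


def record_gc(phase, info):
    # CPython calls this before ("start") and after ("stop") each collection.
    if phase == "stop":
        gc_stats.append((info["generation"], info["collected"], info["uncollectable"]))


gc.callbacks.append(record_gc)


class Peer:
    def __init__(self):
        self.other = None


a, b = Peer(), Peer()
a.other, b.other = b, a  # a reference cycle only the cycle collector can free
del a, b
gc.collect()
gc.callbacks.remove(record_gc)  # stop recording when done

total_collected = sum(collected for _, collected, _ in gc_stats)
print(total_collected >= 2)  # True: both Peer objects were reclaimed
```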

Track Allocation Hotspots

Profiling tools can highlight functions or modules that are responsible for the highest number of object allocations. Allocation hotspots are not always a leak by themselves, but when certain areas consistently allocate large numbers of objects that never get collected, it becomes a red flag. Memory profilers can be configured to show allocation counts and stack traces leading to those allocations. In Java, jmap (class histograms and heap dumps) and JProfiler (allocation recording with call trees) help developers identify which classes and code paths account for the most memory. For native applications, Valgrind's Massif tool is helpful in tracing allocation peaks. Tracking these hotspots allows teams to inspect the design of high-churn functions or loops. A service that repeatedly allocates memory inside a polling thread, without ever releasing references to those objects, can lead to a slow-growing memory footprint. Developers can optimize or restructure such code paths to ensure that temporary objects are released after their purpose is served. By addressing hotspots early, long-term leaks are minimized before they accumulate across user sessions or service cycles.
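Python's `tracemalloc` module can group live allocations by source line, which is a simple way to locate allocation hotspots. In this sketch, `top_allocation_sites`, `poll_once`, and `poll_many` are hypothetical; `poll_once` plays the role of a polling step that keeps every payload it receives. The helper holds on to the function's result while taking the snapshot, so the retained allocations are still live when they are measured.

```python
import tracemalloc


def top_allocation_sites(func, limit=3):
    """Run func under tracemalloc and return (result, largest allocation sites)."""
    tracemalloc.start(10)  # keep up to 10 frames per allocation
    try:
        result = func()
        snapshot = tracemalloc.take_snapshot()
    finally:
        tracemalloc.stop()
    # Group live allocations by source line, largest total size first.
    return result, snapshot.statistics("lineno")[:limit]


def poll_once(buffer):
    # Hypothetical polling step that keeps every payload it receives.
    buffer.append(bytearray(64 * 1024))
    return buffer


def poll_many():
    buffer = []
    for _ in range(100):
        poll_once(buffer)
    return buffer


kept, stats = top_allocation_sites(poll_many)
for stat in stats:
    print(stat)  # file:line, total size, and allocation count per site
```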

Observe Application Behavior Under Load

Load testing is a reliable way to surface memory leaks that remain hidden under typical development workloads. By simulating high concurrency, sustained traffic, or repeated usage patterns, developers can observe how the application behaves under stress. Memory leaks often reveal themselves during these scenarios through increasing memory consumption, slower response times, and eventually out-of-memory errors. Load test results should be paired with memory monitoring and logs to identify whether resource usage stabilizes after the load or continues to rise. Tools like JMeter, Locust, and k6 help simulate load, while system and application metrics provide feedback loops. This method is especially useful for identifying leaks in authentication flows, file processing, data streaming, or any code paths that execute per request. Load testing in a staging or pre-production environment allows teams to uncover leaks that would otherwise manifest in production, where detection becomes riskier and remediation more disruptive.

Monitor Thread or Handle Counts

Memory leaks are not limited to object heap usage. System-level resources such as threads, file descriptors, sockets, and GUI handles also consume memory and must be explicitly released. Leaking these resources can exhaust OS limits, resulting in system instability or application crashes. Developers should monitor thread pools, socket states, and open file handles to detect abnormal retention. Tools like lsof, netstat, or platform-specific resource monitors help track open resources at runtime. For example, if an application creates threads for handling tasks but never terminates them properly, memory usage will grow in parallel with thread count. Similarly, unclosed files or sockets can persist in the background, accumulating system-level overhead even if they are idle. These types of leaks are particularly insidious in long-lived services and servers with high throughput. Proper lifecycle management of these resources—alongside automated cleanup and shutdown hooks—ensures that system memory is reclaimed promptly and safely.

Use APM and Runtime Monitoring Tools

Application Performance Monitoring (APM) tools provide continuous visibility into memory usage, garbage collection behavior, and object lifetimes across environments. Solutions like New Relic, Dynatrace, AppDynamics, and Datadog offer integrated memory dashboards and anomaly detection for live applications. These platforms can alert teams when memory usage exceeds thresholds, or when specific services display unusual behavior under load. Some tools also include historical comparisons and retention analysis, helping correlate memory trends with deployments or traffic spikes. In production environments where profiling is too intrusive, APM tools serve as the primary lens for spotting memory leaks. They help trace memory-intensive requests, identify slow endpoints, and highlight services that retain objects longer than expected. Many APM platforms also support heap dump triggers or object sampling, which provide useful diagnostic data with limited runtime overhead. Integrating APM solutions early in the development lifecycle enables proactive leak detection and accelerates root cause analysis when issues do arise.

Compare Memory Snapshots Before and After Tasks

A straightforward yet effective technique for detecting memory leaks is to take memory snapshots at key moments in the application lifecycle—before and after executing major operations. For example, if your application loads user sessions, processes large datasets, or runs batch jobs, capturing a snapshot of the heap before the operation and another afterward allows you to analyze what objects were created and which ones remain. Ideally, temporary objects should be released after the task is complete. If large volumes of memory remain occupied with no obvious reason, it may indicate that objects are being held unintentionally. Heap analysis tools make it possible to compare snapshots and highlight which objects have increased in count or size. This delta-focused investigation is particularly effective for spotting leaks in isolated modules or features. When paired with logs, metrics, and allocation tracking, snapshot comparisons can lead directly to the code paths that are responsible for leaking memory.

Prevention of Memory Leaks

Preventing memory leaks is as important as detecting them. While tools and diagnostics can help uncover leaks after they appear, robust design practices, disciplined resource management, and adherence to language-specific conventions can prevent most leaks from occurring in the first place. Proactive prevention reduces debugging time, improves application stability, and ensures scalability as systems grow. Below are proven techniques and architectural habits that minimize the risk of memory leaks across different programming environments.

Use Structured Resource Management Constructs

Languages like Java, C#, and Python provide structured constructs for automatic resource cleanup. These include try-with-resources, using statements, and context managers. When used correctly, they ensure that resources such as files, sockets, and database connections are closed even if exceptions occur. Developers should favor these constructs over manual close calls, which are prone to omission. In C++, RAII (Resource Acquisition Is Initialization) guarantees that resources are released when their owning objects go out of scope; C has no equivalent language feature, so C code relies on disciplined cleanup paths, such as a single exit label that releases everything acquired. These patterns reduce the chance of forgetting to clean up and lead to safer, more predictable code. Teams should standardize on these constructs and treat any manual resource management as a code smell that requires special scrutiny during reviews.
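When the number of resources is only known at runtime, Python's `contextlib.ExitStack` extends the same guarantee to all of them. A sketch, assuming a hypothetical `merge_files` helper: each file is registered with the stack as soon as it opens, so an exception on a later `open` still closes the earlier ones.

```python
import os
import tempfile
from contextlib import ExitStack


def merge_files(paths, out_path):
    """Concatenate several files, closing every handle even if one open fails."""
    with ExitStack() as stack:
        sources = [stack.enter_context(open(p, "rb")) for p in paths]
        out = stack.enter_context(open(out_path, "wb"))
        for src in sources:
            out.write(src.read())
        return sources + [out]  # returned only so the caller can inspect .closed


with tempfile.TemporaryDirectory() as tmp:
    parts = []
    for i in range(3):
        path = os.path.join(tmp, f"part{i}.txt")
        with open(path, "wb") as f:
            f.write(f"chunk {i}\n".encode())
        parts.append(path)
    handles = merge_files(parts, os.path.join(tmp, "merged.txt"))
    print(all(h.closed for h in handles))  # True
```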

Deregister Event Listeners and Callbacks Promptly

Event-driven code requires explicit unsubscription of listeners when the object registering them is no longer needed. Failing to do so leads to retained references and memory that cannot be freed. In systems with GUI elements, real-time data updates, or custom event buses, every registration should be mirrored with a deregistration. This practice is critical in modular or dynamic UI frameworks where components are frequently mounted and unmounted. One common mistake is registering a listener during initialization but failing to remove it during destruction or unmounting. Memory leaks accumulate when components are destroyed visually but remain referenced logically. Developers should centralize event subscription logic and ensure teardown routines are triggered consistently. Where available, use weak event patterns or framework-provided lifecycle hooks to automate cleanup. Additionally, adopt unit and integration tests that validate the removal of listeners after component deactivation or page unloads.
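One form of the weak event pattern, sketched in Python: the publisher stores `weakref.WeakMethod` objects instead of bound methods, so subscribing does not keep the subscriber alive, and dead entries are pruned on the next publish. `WeakEventBus` and `Panel` are hypothetical names, and the immediate cleanup shown relies on CPython's reference counting. Such a pattern limits the damage from a missing deregistration; explicit teardown is still clearer.

```python
import weakref


class WeakEventBus:
    """Publisher that holds listeners weakly, so it never keeps them alive."""

    def __init__(self):
        self._listeners = []

    def subscribe(self, bound_method):
        # WeakMethod is needed for bound methods: a plain weakref to a bound
        # method would die immediately, because the method object is temporary.
        self._listeners.append(weakref.WeakMethod(bound_method))

    def publish(self, event):
        alive = []
        for ref in self._listeners:
            method = ref()
            if method is not None:
                method(event)
                alive.append(ref)
        self._listeners = alive  # prune listeners whose owners were collected

    def listener_count(self):
        return len(self._listeners)


class Panel:
    def __init__(self, bus):
        self.seen = []
        bus.subscribe(self.on_event)

    def on_event(self, event):
        self.seen.append(event)


bus = WeakEventBus()
panel = Panel(bus)
bus.publish("refresh")
del panel  # the bus does not keep the panel alive
bus.publish("refresh")
print(bus.listener_count())  # 0
```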

Limit Use of Static and Global References

Static fields and global variables are often used for convenience, but they come with the cost of permanence. Any object referenced from a static context remains in memory for the entire runtime of the application, regardless of whether it is still needed. This becomes especially dangerous when large collections, session data, or UI elements are stored statically. Over time, these objects accumulate and create unintended memory retention. To prevent this, developers should use static fields only for immutable constants, utility methods, or lifecycle-managed singletons. Avoid storing context-dependent or heavy objects statically. When global references are required, pair them with expiration logic, eviction policies, or manual nulling strategies. During shutdown or component teardown, statically held resources should be explicitly cleared. Static usage should also be reviewed during pull requests to ensure that temporary or transactional data does not end up in long-lived storage unintentionally.

Break Circular References When Necessary

In garbage-collected environments, circular references can still delay or prevent reclamation: reference-counted schemes cannot free a cycle without a separate cycle detector, and a cycle that remains attached to a long-lived object stays reachable. This is particularly common when using closures, linked data structures, or bidirectional relationships. Developers should be cautious about forming cycles between objects that reference each other. In C++, use weak_ptr to break cycles formed by shared_ptr. In Java or Python, review object graphs and use weak references for back-pointers and caches so that objects can be collected once nothing else strongly references them. When using closures or anonymous classes, minimize the scope of captured variables. Avoid referencing entire class instances when only a method or small piece of state is required. Closures that inadvertently capture large objects are a frequent source of leaks in async or reactive code. Regularly auditing these patterns and testing memory behavior during development helps prevent circular references from persisting beyond their usefulness.
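A Python sketch of narrowing what a closure captures. `QuarterlyReport` and the two `make_label_*` functions are hypothetical. The leaky version captures the whole report, so the closure keeps the large `rows` list alive; the lean version copies the one string it needs, so the report can be freed as soon as the caller drops it (immediately, under CPython's reference counting).

```python
import weakref


class QuarterlyReport:
    def __init__(self):
        self.rows = list(range(100_000))  # large payload
        self.title = "Q3 summary"


def make_label_leaky(report):
    # The lambda refers to `report`, so it keeps the entire object alive.
    return lambda: f"Report: {report.title}"


def make_label_lean(report):
    title = report.title  # capture only the small piece of state needed
    return lambda: f"Report: {title}"


report = QuarterlyReport()
probe = weakref.ref(report)
label = make_label_lean(report)
del report
print(label(), probe() is None)  # Report: Q3 summary True
```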

Use Memory-Efficient Data Structures and Patterns

Choosing the right data structure can help avoid unnecessary memory retention. For example, Java's WeakHashMap and Python's WeakKeyDictionary hold their keys weakly, so an entry is removed automatically once its key is no longer strongly referenced elsewhere and has been collected; Python's WeakValueDictionary does the same for values. Avoid defaulting to unbounded lists or maps when a more suitable structure, like an LRU cache or bounded queue, can be applied. In cases where large datasets must be retained temporarily, segment the data and release chunks periodically to reduce memory pressure. Additionally, avoid premature optimization that leads to caching everything "just in case." Implementing clear policies for expiration, eviction, or size limits helps the system manage memory better without developer intervention. Profiling during design, not just after leaks occur, helps validate assumptions about data retention and structure size under realistic loads.
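A Python sketch of two such structures: a `weakref.WeakKeyDictionary` used as a side table whose entries disappear with their keys, and a `collections.deque` with `maxlen` as a bounded queue. `Document`, `word_counts`, and `recent_events` are hypothetical names; the immediate removal shown depends on CPython's reference counting.

```python
import weakref
from collections import deque


class Document:
    """Hypothetical object owned elsewhere in the application."""

    def __init__(self, name):
        self.name = name


# Side table of derived data: an entry disappears once its Document key
# is no longer strongly referenced anywhere else.
word_counts = weakref.WeakKeyDictionary()

doc = Document("spec.txt")
word_counts[doc] = 4213
print(len(word_counts))  # 1
del doc
print(len(word_counts))  # 0 under CPython's reference counting

# Bounded queue: once maxlen is reached, appending drops the oldest item.
recent_events = deque(maxlen=3)
for event_id in range(10):
    recent_events.append(event_id)
print(list(recent_events))  # [7, 8, 9]
```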

Dispose of Unused Objects Explicitly

Although garbage-collected languages free memory automatically, an object is reclaimed only after it becomes unreachable. If references remain, the memory stays allocated. Developers can release an object sooner by setting the last reference to it to null (in Java) or None (in Python), or by removing it with del in Python. This does not send any special signal to the garbage collector; it simply removes a reference, and once no references remain the object becomes eligible for collection. The technique matters most for long-lived references, such as fields of background workers, entries in long-lived collections, or variables in long loops and session handlers, where objects would otherwise stay referenced for an extended period. In Java, nulling ordinary local variables is rarely necessary, because JIT-compiled code typically stops treating a local as live after its last use. In performance-critical applications, being intentional about object lifecycle can significantly reduce peak memory usage. However, this should be used judiciously to avoid cluttering code or introducing bugs. As a principle, ensure that variables holding large or sensitive data are cleared as soon as their task is finished.

Adopt Defensive Allocation Strategies

Memory leaks can be reduced by allocating memory only when it’s truly needed. Avoid pre-allocating large structures unless required for performance. Use lazy initialization techniques where memory is allocated just-in-time and released as soon as the object’s task is complete. Track memory usage through scoped structures and batch-process large datasets rather than loading them entirely into memory. In some environments, pooling can also cause memory leaks if objects are never returned to the pool. Ensure that any custom memory management logic includes timeouts or leak detection logic. Developers should adopt the mindset that every allocation should come with a plan for deallocation, especially in performance-sensitive or resource-constrained systems.
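A Python sketch of a pool that cannot leak its objects through a forgotten return: borrowing happens through a context manager, so the buffer goes back to the pool even when processing fails, and a `timeout` turns pool exhaustion into an error rather than an indefinite wait. `BufferPool`, `borrow`, and `outstanding` are hypothetical names; `outstanding` is an unsynchronized counter kept only for illustration.

```python
import queue
from contextlib import contextmanager


class BufferPool:
    """Fixed-size pool whose buffers are always returned, even on error."""

    def __init__(self, size, buffer_bytes):
        self._free = queue.Queue()
        for _ in range(size):
            self._free.put(bytearray(buffer_bytes))
        self.outstanding = 0  # illustrative only; not thread-safe

    @contextmanager
    def borrow(self, timeout=1.0):
        # queue.Empty is raised if no buffer frees up within `timeout`,
        # which makes a leaked (never-returned) buffer visible.
        buf = self._free.get(timeout=timeout)
        self.outstanding += 1
        try:
            yield buf
        finally:
            self.outstanding -= 1
            self._free.put(buf)  # always hand the buffer back


pool = BufferPool(size=2, buffer_bytes=4096)
try:
    with pool.borrow() as buf:
        buf[:5] = b"hello"
        raise ValueError("processing failed")
except ValueError:
    pass
print(pool.outstanding)  # 0
```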

Incorporate Memory Auditing into CI/CD

Prevention isn’t complete without ongoing monitoring. Integrating memory audits into the CI/CD pipeline helps detect regressions early. Tools like automated profilers, allocation counters, or synthetic load tests can be scheduled to run before each deployment. These systems track key metrics such as heap size, GC frequency, object counts, and resource handles. When thresholds are exceeded or deviations from baselines are detected, teams are alerted before the changes reach production. This proactive approach turns memory management into a continuous practice, rather than a reactive fix. Teams should also include memory-related KPIs in their quality criteria and conduct regular code reviews focused on lifecycle management. Establishing a memory hygiene culture ensures that prevention is built into the development process.
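One way to sketch such a gate in Python is with the standard tracemalloc module: run a workload repeatedly and report failure if traced memory grows beyond a budget. The workloads, the 64 KB budget, and the iteration count below are illustrative assumptions that a real pipeline would calibrate against its own baselines.

```python
import tracemalloc
from typing import Callable


def memory_growth_bytes(workload: Callable[[], None], iterations: int = 50) -> int:
    """Traced memory still held after `iterations` extra runs of workload."""
    tracemalloc.start()
    try:
        workload()  # warm-up run so one-time allocations are not counted
        baseline, _ = tracemalloc.get_traced_memory()
        for _ in range(iterations):
            workload()
        current, _ = tracemalloc.get_traced_memory()
        return current - baseline
    finally:
        tracemalloc.stop()


def within_budget(workload: Callable[[], None], budget_bytes: int, iterations: int = 50) -> bool:
    growth = memory_growth_bytes(workload, iterations)
    print(f"growth after {iterations} runs: {growth} bytes (budget: {budget_bytes})")
    return growth <= budget_bytes


_retained = []  # hypothetical module state that a regression appends to


def leaky_workload() -> None:
    _retained.append(bytearray(10_000))  # simulated regression: never released


def clean_workload() -> None:
    buffer = bytearray(10_000)  # freed when the function returns
    buffer[0] = 1


def main() -> int:
    """Exit code for a CI step: 0 when within budget, 1 otherwise."""
    return 0 if within_budget(clean_workload, budget_bytes=64 * 1024) else 1
```

A pipeline step could call `sys.exit(main())` so that a budget violation produces a non-zero exit code and fails the build. Note that tracemalloc only sees allocations made through Python’s memory allocators, so native extensions that call malloc directly need a different tool.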

Unit Testing for Memory Leaks

While memory leaks are typically associated with runtime behavior and long-term application performance, they can and should be caught during testing—especially through targeted unit tests. Integrating memory verification into unit testing workflows enables teams to identify leaks earlier in the development process, before they escalate in production. Unit tests designed for memory safety help ensure that object lifecycle boundaries are respected, resources are released correctly, and operations complete without retaining unintended references. Although unit testing alone cannot uncover all leaks, it is a critical first line of defense that reinforces good engineering discipline and encourages leak-aware design.

Design Tests Around Allocation and Cleanup Behavior

Effective unit tests for memory management focus not just on functional correctness, but also on the lifecycle of objects. Each test should validate that temporary objects are created, used, and discarded appropriately. When dealing with custom caches, session managers, or service factories, write tests that simulate object creation and verify that nothing persists unnecessarily once the operation completes. This often involves invoking the same logic multiple times and comparing memory usage or object counts between runs. If the memory footprint increases with each invocation, it may indicate a leak. For systems that handle large payloads or high object churn, include teardown logic in the test to enforce cleanup. In some environments, instrumenting test code with lightweight allocation counters or reference checks helps reveal objects that fail to go out of scope. These assertions ensure that memory use remains predictable and self-contained within the scope of the test.
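A minimal sketch of an object-count check using Python’s unittest and gc modules. Connection and handle_request are hypothetical stand-ins for the code under test; the optional registry argument simulates a bug that retains every connection it creates.

```python
import gc
import unittest


class Connection:
    """Hypothetical object created per request by the code under test."""

    def __init__(self, registry=None) -> None:
        self.payload = bytearray(1024)
        if registry is not None:
            registry.append(self)  # simulated bug: the connection is retained


def handle_request(registry=None) -> int:
    conn = Connection(registry)
    return len(conn.payload)


def live_instances(cls) -> int:
    """Count live objects whose exact type is cls, after a full collection."""
    gc.collect()
    return sum(1 for obj in gc.get_objects() if type(obj) is cls)


class HandleRequestMemoryTest(unittest.TestCase):
    def assert_no_instance_growth(self, func, cls, runs: int = 20) -> None:
        func()  # warm-up call, excluded from the comparison
        before = live_instances(cls)
        for _ in range(runs):
            func()
        after = live_instances(cls)
        self.assertEqual(after, before, f"{after - before} {cls.__name__} object(s) retained")

    def test_handle_request_releases_connections(self) -> None:
        self.assert_no_instance_growth(handle_request, Connection)
```

The warm-up call keeps one-time allocations out of the comparison. Passing a shared list as registry makes the live count grow by one per call, which is exactly the pattern the assertion is meant to catch. The test case runs under the normal `python -m unittest` runner.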

Use Leak Detection Libraries and Utilities

Modern programming ecosystems provide libraries that extend unit test frameworks with memory leak detection capabilities. For C++, Google Test suites can be run under Valgrind or built with AddressSanitizer (which includes LeakSanitizer on supported platforms) to track allocations during test execution. Java developers may use tools like junit-allocations, or enable JDK Flight Recorder (JFR) during test runs, to observe retained memory. Python offers objgraph, tracemalloc, and the gc module’s inspection functions to trace object growth between assertions. These libraries can be incorporated into standard test suites and used to set expectations around object counts or memory changes. For example, a test may assert that no additional instances of a class remain after a method completes. By wrapping test cases in controlled allocation scopes or memory snapshots, developers can validate that no hidden references persist. These tools not only catch memory leaks early but also make them easier to reproduce consistently, which is often difficult during full application profiling.
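For Python, the tracemalloc module can compare snapshots taken before and after repeated calls. The sketch below assumes a hypothetical register_event function with a switchable simulated leak. The filters drop allocations made by tracemalloc’s own bookkeeping, and the 10,000-byte tolerance is an arbitrary allowance for interpreter noise, not a recommended value.

```python
import tracemalloc
import unittest

_registry = []  # hypothetical module-level state that can leak


def register_event(name: str, keep: bool = False) -> None:
    record = {"name": name, "payload": bytearray(2048)}
    if keep:
        _registry.append(record)  # simulated bug: records accumulate forever


# Ignore allocations attributed to tracemalloc itself or to unknown frames.
NOISE_FILTERS = [
    tracemalloc.Filter(False, tracemalloc.__file__),
    tracemalloc.Filter(False, "<unknown>"),
]


def retained_bytes(func, runs: int = 100) -> int:
    """Bytes allocated during `runs` calls of func that are still held afterwards."""
    tracemalloc.start()
    try:
        func()  # warm-up call, excluded from the comparison
        before = tracemalloc.take_snapshot()
        for _ in range(runs):
            func()
        after = tracemalloc.take_snapshot()
    finally:
        tracemalloc.stop()
    before = before.filter_traces(NOISE_FILTERS)
    after = after.filter_traces(NOISE_FILTERS)
    # Differences grouped by source line, largest size change first.
    diffs = after.compare_to(before, "lineno")
    return sum(d.size_diff for d in diffs if d.size_diff > 0)


class RegisterEventMemoryTest(unittest.TestCase):
    def test_no_retained_allocations(self) -> None:
        growth = retained_bytes(lambda: register_event("click"))
        self.assertLess(growth, 10_000, "register_event retained memory")
```

Because compare_to groups differences by source line and sorts them by the magnitude of the size change, printing the first few entries of diffs points directly at the lines that are retaining memory, which makes a failing test much easier to act on.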

Simulate Repetitive Usage and Measure Stability

Memory leaks often occur in repetitive or long-running operations. To detect these patterns through unit testing, simulate repeated execution of the same function or feature within a loop. This approach can surface gradual memory growth that would not be obvious in a single test pass. For instance, a caching function that fails to evict stale entries may pass under isolated conditions but fail under sustained repetition. Structure your tests to execute dozens or hundreds of iterations, and measure memory or object state after completion. Some testing frameworks allow for fixture-level setup and teardown hooks that enable resource checks between cycles. Including these loops as part of test automation helps ensure that memory use remains consistent over time. This is particularly valuable in services that must maintain stability over long sessions, such as background processors, API endpoints, or batch jobs. By observing whether memory remains stable after repeated execution, developers gain early confidence in the robustness of their memory management.
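The following Python sketch checks whether memory plateaus under repetition. It records traced memory at the midpoint and at the end of a run over distinct inputs, so growth during the second half indicates retention that never levels off. render_unbounded and its cache are hypothetical; render_bounded uses the standard functools.lru_cache with maxsize=128.

```python
import functools
import tracemalloc

_unbounded_cache = {}  # hypothetical cache with no eviction policy


def render_unbounded(user_id: int) -> str:
    # One entry per distinct user, kept forever.
    if user_id not in _unbounded_cache:
        _unbounded_cache[user_id] = "x" * 1000 + str(user_id)
    return _unbounded_cache[user_id]


@functools.lru_cache(maxsize=128)
def render_bounded(user_id: int) -> str:
    # Same work, but lru_cache evicts least recently used results beyond 128.
    return "x" * 1000 + str(user_id)


def plateau_growth(func, iterations: int = 2000) -> int:
    """Traced memory added during the second half of a run of distinct inputs.

    The first half lets caches fill up to any bound they have; a function
    whose memory use plateaus should add close to nothing afterwards.
    """
    half = iterations // 2
    tracemalloc.start()
    try:
        for i in range(half):
            func(i)
        midpoint, _ = tracemalloc.get_traced_memory()
        for i in range(half, iterations):
            func(i)
        end, _ = tracemalloc.get_traced_memory()
        return end - midpoint
    finally:
        tracemalloc.stop()
```

Both functions behave identically for a single call, so an isolated test cannot tell them apart. After 2,000 distinct inputs, however, the unbounded version is still growing during the second half, while the LRU version has leveled off at 128 cached entries.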

Assert Proper Resource Release in Test Teardowns

Unit tests should always return the environment to a clean state, and this includes memory. In addition to functional assertions, test teardown methods are an ideal place to verify that temporary resources have been released. Whether you’re dealing with file streams, database connections, or mock service instances, teardown blocks can include explicit dispose, close, or null operations. These patterns reinforce the principle that all resources must be released when the task completes. Where applicable, also assert that key objects are no longer reachable or that finalizers have run. This practice encourages developers to write more self-contained code and reduces test pollution across suites. When teardown code validates object lifecycles, it becomes much easier to detect regressions or behavior changes that introduce memory leaks. Integrating memory assertions into test cleanup also improves reliability in parallel or continuous testing environments, where test isolation is essential.

Coding Samples

Here are some coding examples that demonstrate common memory leaks and their resolutions:

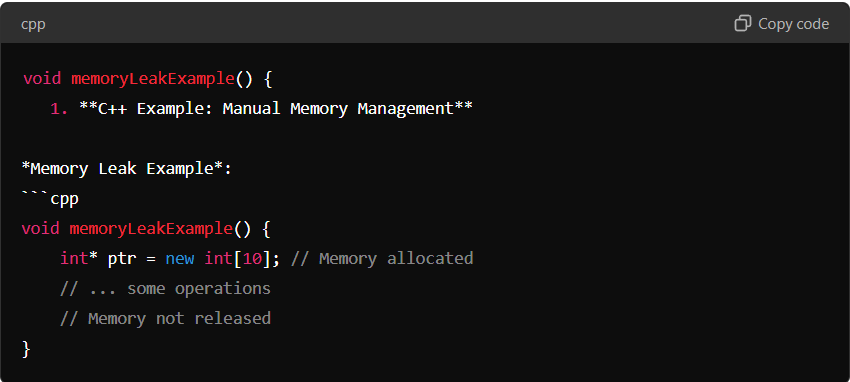

C++ Example: Manual Memory Management

Memory Leak Example:

In this example, memory is allocated using new[] to create an array of integers. However, the memory is not released because there is no delete[] call to free it, leading to a memory leak.

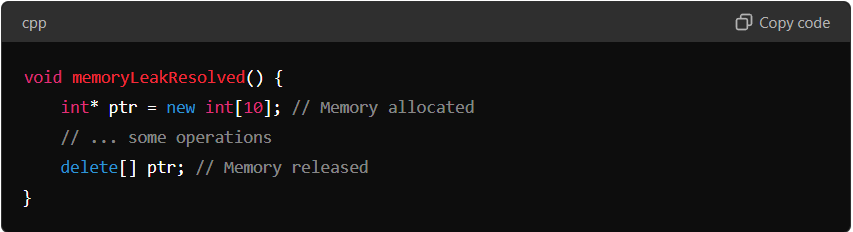

Resolved Example:

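To resolve the leak, the allocated memory is freed with delete[], which matches the new[] that allocated it. This returns the memory to the heap as soon as it’s no longer needed. In modern C++, holding the array in a std::vector<int> or a std::unique_ptr<int[]> releases it automatically when the owner goes out of scope, even if an exception is thrown.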

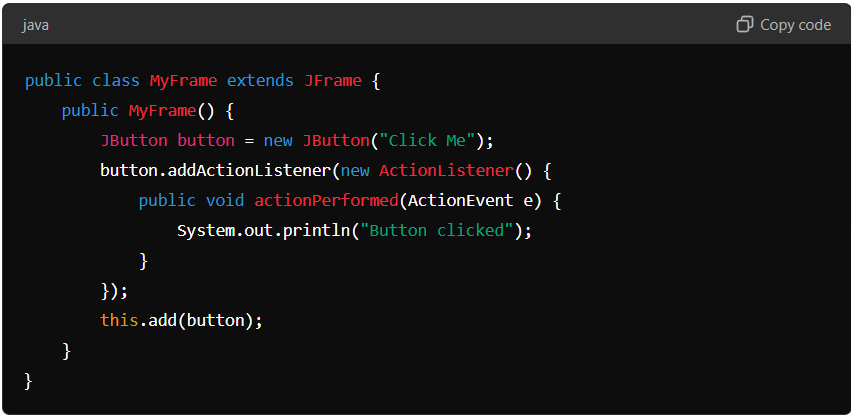

Java Example: Listener Memory Leak

Memory Leak Example:

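In this example, an anonymous inner class is used to create an ActionListener for a button. An anonymous inner class holds an implicit reference to its enclosing instance, so if the button is removed or the frame is closed without removing the listener, any longer-lived object that still references the listener also keeps the enclosing object, and with it the button or frame, in memory.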

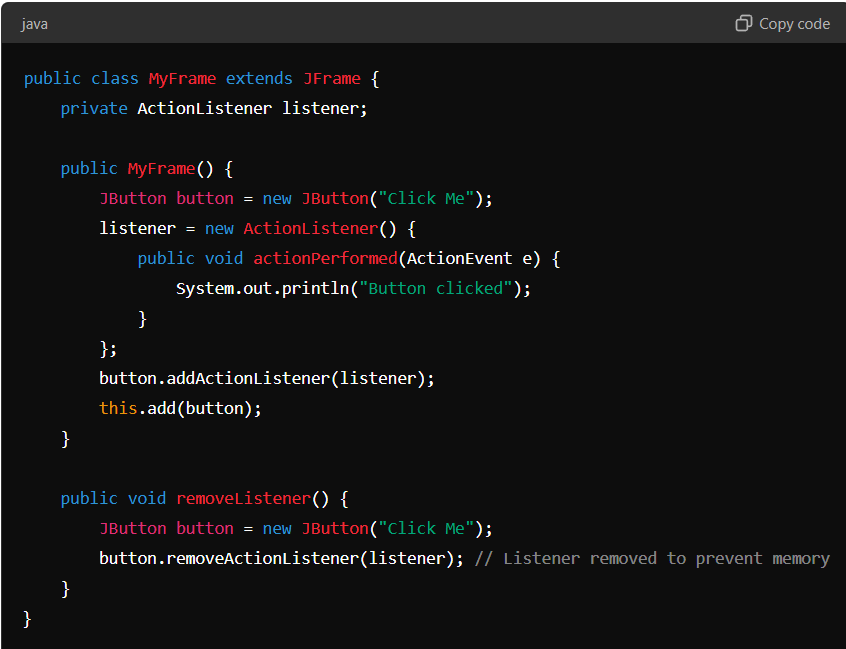

Resolved Example:

By keeping a reference to the listener and explicitly removing it when the button is no longer needed, the potential for a memory leak is mitigated.

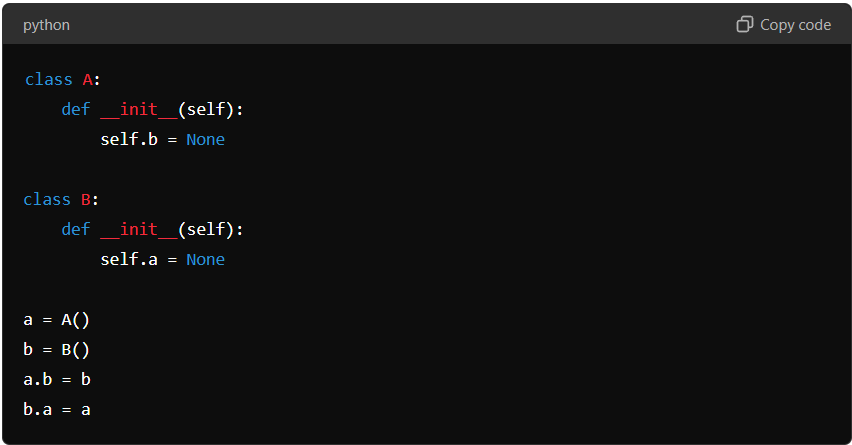

Python Example: Circular Reference

Memory Leak Example:

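In this example, a and b hold references to each other, creating a circular reference. Reference counting alone can never free such a cycle, so the objects stay in memory until CPython’s cyclic garbage collector runs. Large or frequently created cycles therefore inflate memory use between collections, and if the collector is disabled (for example with gc.disable()), they are never reclaimed at all. Before Python 3.4, cycles involving objects with __del__ methods were not collected automatically and accumulated in gc.garbage.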

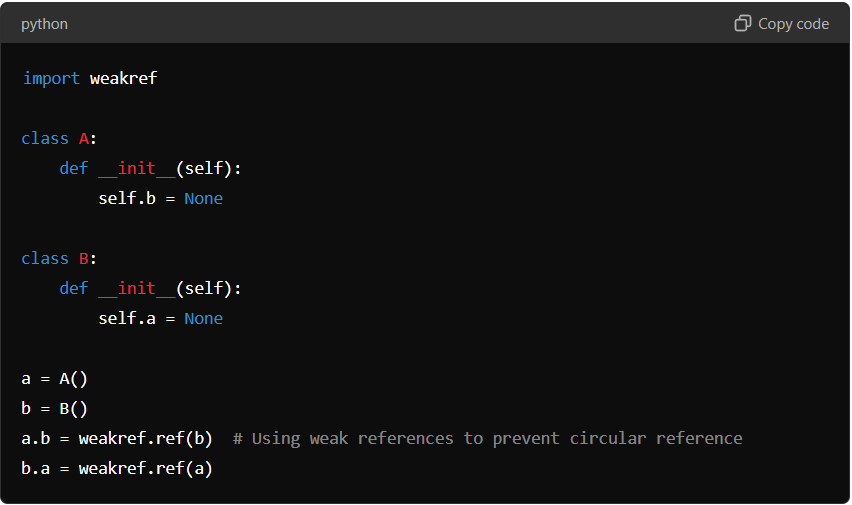

Resolved Example:

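By using weakref, the circular reference is broken: the weak back-reference does not keep its target alive, so reference counting can reclaim the objects as soon as the last strong reference disappears, without waiting for the cyclic garbage collector.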
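A Python sketch of the weakref approach, using a parent/child pair rather than the a/b pair above: the parent owns its children through ordinary references, and each child points back through weakref.ref. StrongNode, WeakNode, and still_alive_after_del are hypothetical names. The helper disables the cyclic collector temporarily only to make the difference visible; with it enabled, CPython would eventually reclaim the strong cycle as well.

```python
import gc
import weakref


class StrongNode:
    """Parent and child point at each other strongly: a reference cycle."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.children = []
        self.parent = None

    def add_child(self, child: "StrongNode") -> None:
        self.children.append(child)
        child.parent = self


class WeakNode:
    """The child's back-link is a weak reference, so no strong cycle forms."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.children = []
        self._parent = None

    @property
    def parent(self):
        # Dereference the weak back-link; None once the parent is gone.
        return None if self._parent is None else self._parent()

    def add_child(self, child: "WeakNode") -> None:
        self.children.append(child)            # strong: parent owns child
        child._parent = weakref.ref(self)      # weak: child does not own parent


def still_alive_after_del(cls) -> bool:
    """Build a parent/child pair, delete both names, report if the parent survives."""
    was_enabled = gc.isenabled()
    gc.disable()  # isolate reference counting from the cyclic collector
    try:
        root, leaf = cls("root"), cls("leaf")
        root.add_child(leaf)
        probe = weakref.ref(root)
        del root, leaf
        return probe() is not None
    finally:
        gc.collect()  # clean up any cycle this check created
        if was_enabled:
            gc.enable()
```

With StrongNode, the pair survives after both names are deleted because each object still references the other. With WeakNode, reference counting frees both immediately, and a surviving child’s parent property simply returns None once its parent is gone.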

SMART TS XL: A Tool for Effective Memory Leak Detection and Resolution

SMART TS XL can significantly enhance the process of detecting and resolving memory leaks. Here’s how this tool can be integrated into your development workflow:

Static Code Analysis: SMART TS XL offers advanced static analysis capabilities, identifying potential memory leaks by analyzing your code. Unlike other tools, it provides deeper insights and more accurate detection of patterns that can lead to memory leaks.

Flowchart Building: SMART TS XL can automatically generate flowcharts that visualize the memory allocation and deallocation processes within your code. This feature is particularly useful for understanding complex memory management scenarios and identifying where leaks might occur.

Impact Analysis: With SMART TS XL, you can perform impact analysis to see how changes in one part of the code might affect memory management in other areas. This is especially beneficial in large projects where even minor changes can have significant repercussions on memory usage.

Code Quality Improvement: Beyond just detecting leaks, SMART TS XL provides suggestions for improving overall code quality, helping you write more robust, maintainable, and leak-resistant code.

By incorporating SMART TS XL into your development process, you can significantly reduce the risk of memory leaks and ensure that your applications remain stable and efficient. Whether you’re dealing with manual memory management in C++ or handling object references in managed languages like Java and Python, SMART TS XL offers the tools you need to maintain high standards of memory management and overall code quality.